Flexible torch neural network architecture API

A flexible API for instantiating PyTorch neural networks composed of sequential linear layers (torch.nn.Linear). It also uses other elements of the torch.nn module, such as activation functions and dropout.

Test implementation 1: Sequential linear neural network

import flexinet
from torch.nn import Tanh

nn = flexinet.models.NN()

# example
nn = flexinet.models.compose_nn_sequential(
    in_dim=50,
    out_dim=50,
    activation_function=Tanh(),
    hidden_layer_nodes={1: [500, 500], 2: [500, 500]},
    dropout=True,
    dropout_probability=0.1,
)
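For orientation, here is a minimal sketch of a comparable network written directly with torch.nn. It is not flexinet's implementation: the helper name `build_sequential` and its `hidden_dims` argument are hypothetical, and the layer ordering (Linear, activation, Dropout) is an assumption about what a call like the one above might produce.

```python
import torch
from torch import nn


def build_sequential(in_dim, out_dim, hidden_dims, activation=nn.Tanh, dropout_probability=0.1):
    """Hypothetical helper: stack Linear -> activation -> Dropout blocks, then a final Linear."""
    layers = []
    prev = in_dim
    for width in hidden_dims:
        layers.append(nn.Linear(prev, width))
        layers.append(activation())
        layers.append(nn.Dropout(p=dropout_probability))
        prev = width
    # Final projection to the output dimension, with no activation or dropout.
    layers.append(nn.Linear(prev, out_dim))
    return nn.Sequential(*layers)


# Same input/output sizes and hidden widths as the example above.
net = build_sequential(in_dim=50, out_dim=50, hidden_dims=[500, 500])
x = torch.randn(4, 50)
y = net(x)
print(y.shape)
```

Building the layer list in a loop is what makes the depth and widths configurable from a single argument, which is presumably the convenience flexinet is wrapping.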

Test implementation 2: vanilla linear VAE

Installation

To install the latest distribution from PyPI:

pip install flexinet

Alternatively, one can install the development version:

git clone https://github.com/mvinyard/flexinet.git; cd flexinet;

pip install -e .

Example

import flexinet as fn
import torch

X = torch.load("X_data.pt")
X_data = fn.pp.random_split(X)
X_data.keys()

dict_keys(['test', 'valid', 'train'])
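The split above returns a dictionary keyed by 'train', 'valid', and 'test'. A rough equivalent in plain PyTorch might look like the sketch below. The function name `random_split_dict`, the 80/10/10 fractions, and the seed are all assumptions for illustration; the README does not state which fractions fn.pp.random_split uses.

```python
import torch


def random_split_dict(X, fractions=(0.8, 0.1, 0.1), seed=0):
    """Hypothetical helper: shuffle rows of X and split them into train/valid/test."""
    generator = torch.Generator().manual_seed(seed)
    n = X.shape[0]
    perm = torch.randperm(n, generator=generator)
    n_train = round(fractions[0] * n)
    n_valid = round(fractions[1] * n)
    return {
        "train": X[perm[:n_train]],
        "valid": X[perm[n_train:n_train + n_valid]],
        # Whatever remains after train and valid goes to test.
        "test": X[perm[n_train + n_valid:]],
    }


X = torch.rand(100, 50)  # stand-in for the tensor loaded from X_data.pt
splits = random_split_dict(X)
print({name: tuple(part.shape) for name, part in splits.items()})
```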

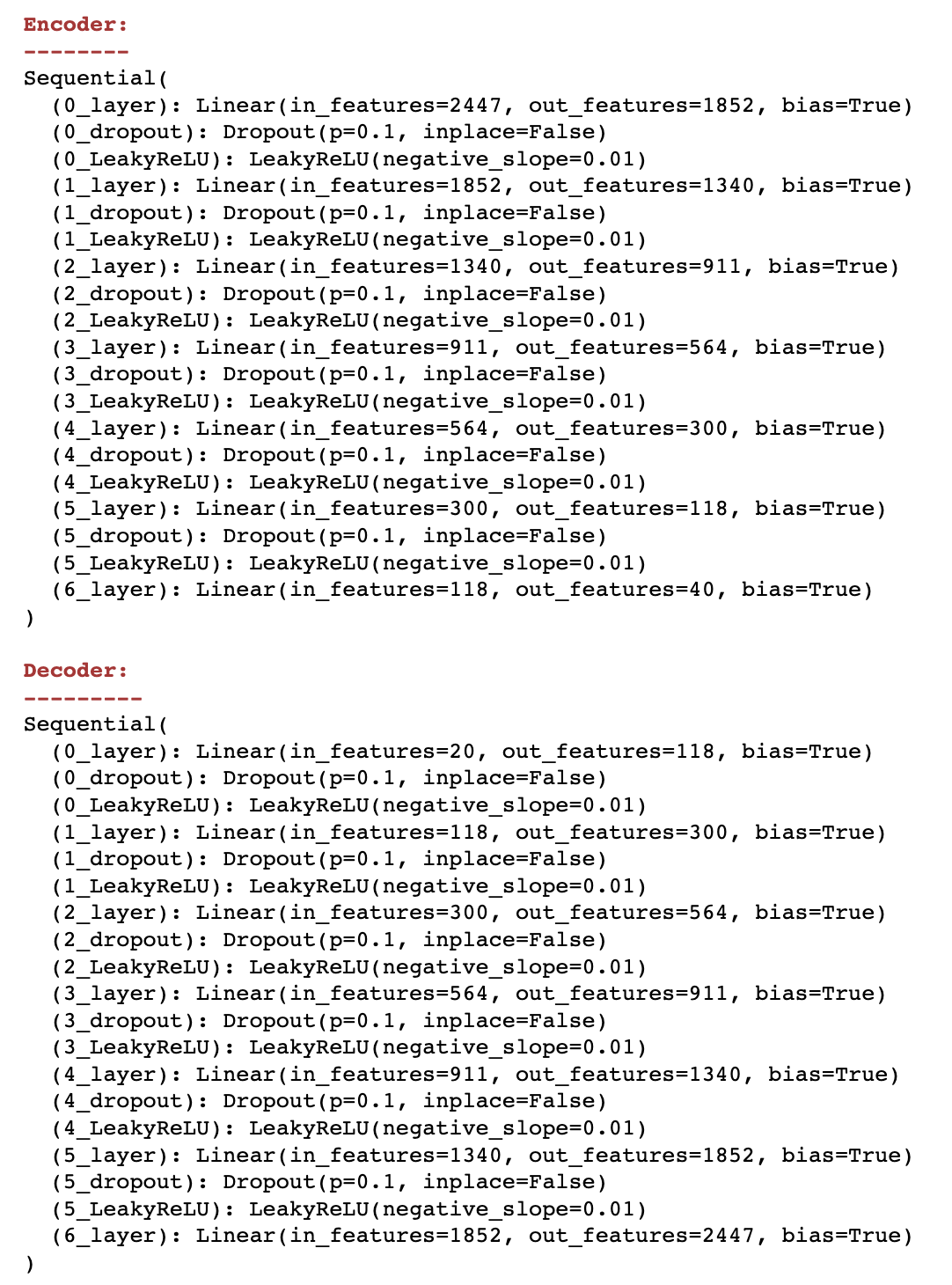

model = fn.models.LinearVAE(
    X_data,
    latent_dim=20,
    hidden_layers=5,
    power=2,
    dropout=0.1,
    activation_function_dict={'LeakyReLU': torch.nn.LeakyReLU(negative_slope=0.01)},
    optimizer=torch.optim.Adam,
    reconstruction_loss_function=torch.nn.BCELoss(),
    reparameterization_loss_function=torch.nn.KLDivLoss(),
    device="cuda:0",
)

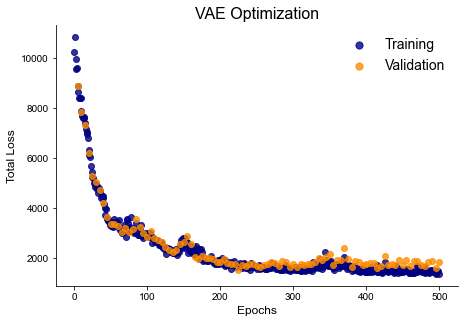

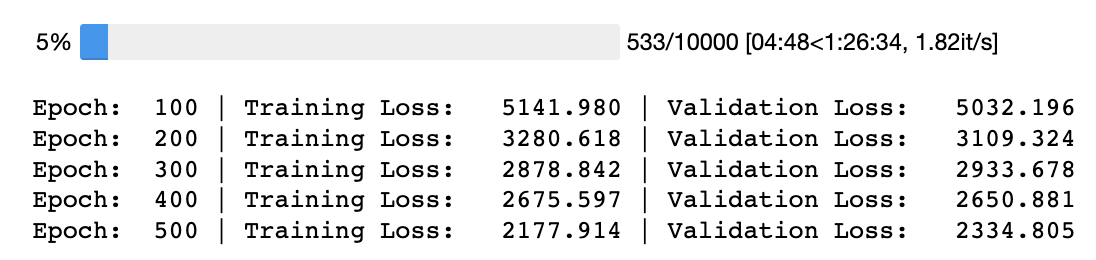

model.train(epochs=10_000, print_frequency=50, lr=1e-4)

model.plot_loss()
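To show what a linear VAE and its training step involve, here is a minimal sketch in plain PyTorch. It is not flexinet's LinearVAE: the class `TinyLinearVAE`, the function `vae_loss`, and the layer sizes are hypothetical. It also uses the closed-form Gaussian KL term in place of torch.nn.KLDivLoss from the example above, and it runs on CPU with random data in [0, 1] so that binary cross-entropy is well defined.

```python
import torch
from torch import nn


class TinyLinearVAE(nn.Module):
    """Hypothetical one-hidden-layer VAE built from Linear layers."""

    def __init__(self, in_dim, hidden_dim, latent_dim):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(in_dim, hidden_dim), nn.LeakyReLU(negative_slope=0.01))
        self.to_mu = nn.Linear(hidden_dim, latent_dim)
        self.to_logvar = nn.Linear(hidden_dim, latent_dim)
        self.decoder = nn.Sequential(
            nn.Linear(latent_dim, hidden_dim),
            nn.LeakyReLU(negative_slope=0.01),
            nn.Linear(hidden_dim, in_dim),
            nn.Sigmoid(),  # keeps reconstructions in (0, 1) for binary cross-entropy
        )

    def forward(self, x):
        h = self.encoder(x)
        mu, logvar = self.to_mu(h), self.to_logvar(h)
        # Reparameterization trick: z = mu + eps * sigma, with eps ~ N(0, I).
        z = mu + torch.randn_like(mu) * torch.exp(0.5 * logvar)
        return self.decoder(z), mu, logvar


def vae_loss(x_hat, x, mu, logvar):
    """Reconstruction (BCE) plus closed-form KL to a standard normal, averaged per sample."""
    recon = nn.functional.binary_cross_entropy(x_hat, x, reduction="sum")
    kl = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())
    return (recon + kl) / x.shape[0]


torch.manual_seed(0)
X = torch.rand(64, 50)  # stand-in data in [0, 1]
model = TinyLinearVAE(in_dim=50, hidden_dim=32, latent_dim=20)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

losses = []
for epoch in range(5):
    optimizer.zero_grad()
    x_hat, mu, logvar = model(X)
    loss = vae_loss(x_hat, X, mu, logvar)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(losses)
```

The recorded `losses` list is the kind of history a method like plot_loss would presumably draw.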

Contact

If you have suggestions, questions, or comments, please reach out to Michael Vinyard via email.
